"""
@Author: 张时贰
@Date: 2022-11-16
@CSDN: 张时贰
@Blog: zhsher.cn
"""

import datetime
import json
import os
import re

import requests
from lxml import etree

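'''
Baidu Tongji (Baidu Analytics) API documentation: https://tongji.baidu.com/api/manual/

To obtain an ACCESS_TOKEN and a REFRESH_TOKEN, see the API documentation or follow the steps below.

How to request a token:

1. In the Baidu Tongji console, open Data Management, enable data access, and obtain your `API Key` and `Secret Key`.

2. Log in to your Baidu account and get a `code` (single-use, valid for 10 minutes) from:
   http://openapi.baidu.com/oauth/2.0/authorize?response_type=code&client_id={CLIENT_ID}&redirect_uri=oob&scope=basic&display=popup
   where `{CLIENT_ID}` is your API Key.

3. Get the `ACCESS_TOKEN` from:
   http://openapi.baidu.com/oauth/2.0/token?grant_type=authorization_code&code={CODE}&client_id={CLIENT_ID}&client_secret={CLIENT_SECRET}&redirect_uri=oob
   where `{CLIENT_ID}` is your API Key,
   `{CLIENT_SECRET}` is your Secret Key,
   and `{CODE}` is the code obtained in step 2.

If the documentation leaves you unsure how to obtain a token, you can use this project's API instead.
'''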
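
# A minimal sketch (not part of the original module) of building the two URLs from
# steps 2 and 3 with urllib.parse.urlencode instead of hand-editing the placeholders.
# The names OAUTH_BASE, build_authorize_url and build_token_url are hypothetical;
# the query parameters are copied from the URLs in the notes above.
from urllib.parse import urlencode

OAUTH_BASE = 'http://openapi.baidu.com/oauth/2.0'


def build_authorize_url(api_key):
    """Return the step-2 URL that shows a one-time `code` after login."""
    query = urlencode({
        'response_type': 'code',
        'client_id': api_key,
        'redirect_uri': 'oob',
        'scope': 'basic',
        'display': 'popup',
    })
    return f'{OAUTH_BASE}/authorize?{query}'


def build_token_url(api_key, secret_key, code):
    """Return the step-3 URL that exchanges `code` for an access token."""
    query = urlencode({
        'grant_type': 'authorization_code',
        'code': code,
        'client_id': api_key,
        'client_secret': secret_key,
        'redirect_uri': 'oob',
    })
    return f'{OAUTH_BASE}/token?{query}'

# Example: print(build_authorize_url('your API Key')), open the URL in a browser,
# log in, and copy the code shown on the page.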

def baidu_get_token(API_Key, Secret_Key, CODE):
    '''
    Obtain a Baidu access token.

    :param API_Key: API Key of the Baidu account
    :param Secret_Key: Secret Key of the Baidu account
    :param CODE: one-time code obtained by logging in and visiting
        http://openapi.baidu.com/oauth/2.0/authorize?response_type=code&client_id={your API_Key}&redirect_uri=oob&scope=basic&display=popup
    :return: {'access_token': access_token, 'refresh_token': refresh_token} on success,
        otherwise an error message string
    '''
    payload = {
        "grant_type": "authorization_code",
        "redirect_uri": "oob",
        "code": CODE,
        "client_id": API_Key,
        "client_secret": Secret_Key,
    }
    r = requests.post('http://openapi.baidu.com/oauth/2.0/token', params=payload)
    getData = r.json()
    try:
        access_token = getData['access_token']
        refresh_token = getData['refresh_token']
        print('Access_Token:' + '\n' + access_token)
        print('Refresh_Token:' + '\n' + refresh_token)
        token = {'access_token': access_token, 'refresh_token': refresh_token}
        return token
    except Exception as e:
        # A missing key means the response carried an error instead of tokens
        e = str(e)
        e = e + ' could not be obtained; make sure the code is valid (it expires after 10 minutes and can be used only once)'
        return e
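
# A minimal sketch (not the author's code) of an alternative to returning an error
# string: parse the decoded token response and raise on failure, so callers can tell
# success from failure without checking the return type. The names TokenError and
# parse_token_response are hypothetical. It assumes a failed response carries
# 'error' / 'error_description' keys as in standard OAuth 2.0 (RFC 6749, section
# 5.2); Baidu's exact error fields may differ.


class TokenError(Exception):
    """Raised when the token endpoint does not return both tokens."""


def parse_token_response(data):
    """Return {'access_token', 'refresh_token'} from a decoded JSON response."""
    if 'access_token' in data and 'refresh_token' in data:
        return {'access_token': data['access_token'],
                'refresh_token': data['refresh_token']}
    # Fall back to generic text when the response has no OAuth error fields
    error = data.get('error', 'unknown_error')
    description = data.get('error_description', 'no description')
    raise TokenError(f'{error}: {description}')

# Example (network call, not run here): token = parse_token_response(r.json())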

def baidu_refresh_token(API_Key, Secret_Key, refresh_token):
    '''
    Refresh the tokens using a refresh_token.

    :param API_Key: API Key of the Baidu account
    :param Secret_Key: Secret Key of the Baidu account
    :param refresh_token: refresh_token of the Baidu account
    :return: {'access_token': access_token, 'refresh_token': refresh_token} on success,
        otherwise an error message string
    '''
    payload = {
        'grant_type': 'refresh_token',
        'refresh_token': refresh_token,
        'client_id': API_Key,
        'client_secret': Secret_Key,
    }
    r = requests.post('http://openapi.baidu.com/oauth/2.0/token', params=payload)
    token = r.json()
    try:
        access_token = token['access_token']
        refresh_token = token['refresh_token']
        print("Tokens refreshed\nAccess_Token = " + access_token + "\nRefresh_Token = " + refresh_token)
        token = {'access_token': access_token, 'refresh_token': refresh_token}
        return token
    except Exception as e:
        e = str(e)
        return 'Error: no ' + e + ' value in the refresh response; check that refresh_token is correct'
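
# A minimal sketch (not part of the original module) of persisting the token dict
# returned by baidu_refresh_token, so the next run starts from the newest
# refresh_token. It assumes the refresh response may hand back a new refresh_token
# (the function above returns one). The default file name baidu_token.json and the
# helper names save_token / load_token are hypothetical.
import json
import os
import tempfile


def save_token(token, path='baidu_token.json'):
    """Write the token dict to `path` as JSON via a temporary file."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file in the same directory, then replace the target,
    # so an interrupted write never leaves a half-written file at `path`
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix='.tmp')
    with os.fdopen(fd, 'w', encoding='utf-8') as f:
        json.dump(token, f)
    os.replace(tmp_path, path)


def load_token(path='baidu_token.json'):
    """Return the saved token dict, or None if nothing has been saved yet."""
    if not os.path.exists(path):
        return None
    with open(path, encoding='utf-8') as f:
        return json.load(f)

# Example (network call, not run here):
#     token = baidu_refresh_token(API_Key, Secret_Key, refresh_token)
#     if isinstance(token, dict):
#         save_token(token)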

def getSiteList(access_token, domain):
    '''
    Request the list of all sites under the Baidu account and pick out the blog's site_id.

    :param access_token: Baidu Tongji access_token
    :param domain: site domain
    :return: a site_info dict used as params for the other requests
        (empty if no site matches `domain`)
    '''
    payload = {'access_token': access_token}
    r = requests.post('https://openapi.baidu.com/rest/2.0/tongji/config/getSiteList', params=payload)
    get_data = r.json()
    getData = get_data['list']
    # End date: today, formatted as yyyymmdd
    now = datetime.date.today().strftime('%Y%m%d')
    site_info = {}
    for i in getData:
        if i['domain'] == domain:
            site_info['site_id'] = i['site_id']
            site_info['domain'] = i['domain']
            site_info['status'] = i['status']
            # Query from the site's creation time up to today
            site_info['start_date'] = i['create_time']
            site_info['end_date'] = now
    return site_info
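
# A minimal sketch (not the author's code) that separates the matching logic of
# getSiteList from the HTTP request, so it can be tried against a sample response
# and fails loudly when the domain is missing instead of returning {}. The helper
# name pick_site is hypothetical; the field names (domain, site_id, status,
# create_time) are the ones getSiteList reads from each 'list' entry.
import datetime


def pick_site(site_list, domain, today=None):
    """Return the site_info dict for `domain`, or raise LookupError."""
    if today is None:
        today = datetime.date.today()
    for site in site_list:
        if site['domain'] == domain:
            return {
                'site_id': site['site_id'],
                'domain': site['domain'],
                'status': site['status'],
                'start_date': site['create_time'],
                'end_date': today.strftime('%Y%m%d'),
            }
    raise LookupError(f'no site with domain {domain!r} under this account')

# Example (network call, not run here): pick_site(r.json()['list'], 'zhsher.cn')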

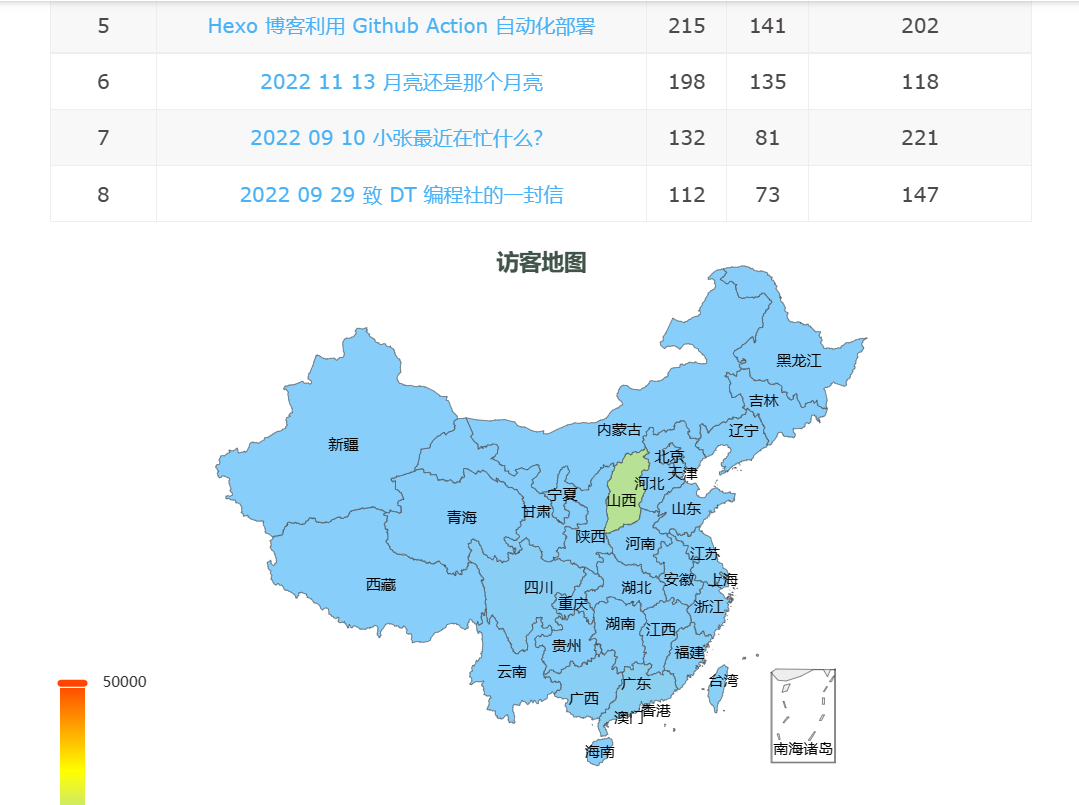

def get_hot_article(access_token, domain):
    '''
    Get the most-visited articles.

    :param access_token: Baidu Tongji access_token
    :param domain: site domain
    :return: overall totals plus, for each article, its title, URL, PV, UV and
        average stay time, sorted by PV
    '''
    site_info = getSiteList(access_token, domain)
    payload = {
        'access_token': access_token,
        'method': 'visit/toppage/a',
        "metrics": "pv_count,visitor_count,average_stay_time",
    }
    payload.update(site_info)
    r = requests.post('https://openapi.baidu.com/rest/2.0/tongji/report/getData', params=payload)
    result = r.json()['result']
    blog_general = {
        "timeSpan": result['timeSpan'][0],
        "total": result['total'],
        "sum_pv_count": result['sum'][0][0],
        "sum_visitor_count": result['sum'][0][1],
        "sum_average_stay_time": result['sum'][0][2],
        "top20_pv_count": result['pageSum'][0][0],
        "top20_visitor_count": result['pageSum'][0][1],
        "top20_average_stay_time": result['pageSum'][0][2],
    }
    # items[0][i][0]['name'] is a page URL and items[1][i] holds its
    # [pv_count, visitor_count, average_stay_time], in the order of `metrics`.
    # Keep only article pages, i.e. URLs that start with https://<domain>/post
    post_pattern = re.compile(r'^https://' + re.escape(site_info['domain']) + r'/post/*')
    article_info = []
    for page, metrics in zip(result['items'][0], result['items'][1]):
        url = page[0]['name']
        if not post_pattern.match(url):
            continue
        article_info.append({
            "title": get_title(url),
            "url": url,
            "pv_count": metrics[0],
            "visitor_count": metrics[1],
            "average_stay_time": metrics[2],
        })
    return {"blog_general": blog_general, "article_info": article_info}
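
# get_hot_article calls get_title once per article, one HTTP request at a time. A
# minimal sketch (not the author's code) of fetching the titles concurrently with
# concurrent.futures.ThreadPoolExecutor. The helper name fetch_titles is
# hypothetical, and `fetch` stands in for get_title so the sketch can be tried
# without network access.
from concurrent.futures import ThreadPoolExecutor


def fetch_titles(urls, fetch, max_workers=8):
    """Return {url: title}, calling fetch(url) for each unique URL in a thread pool."""
    unique_urls = list(dict.fromkeys(urls))  # drop duplicates, keep order
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as unique_urls
        titles = pool.map(fetch, unique_urls)
        return dict(zip(unique_urls, titles))

# Example (network call, not run here):
#     titles = fetch_titles([a['url'] for a in article_info], get_title)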

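def get_title(url):
    '''
    Fetch an article's title, which Baidu Tongji does not report.

    :param url: article URL
    :return: article title
    '''
    # Time out instead of hanging forever on an unresponsive page
    r = requests.get(url, timeout=10)
    html = etree.HTML(r.content.decode('utf-8'))
    # The title is the text of the <h1> directly under the element with id="post-info"
    title = html.xpath('//*[@id="post-info"]/h1//text()')[0]
    return title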

def get_visitor_province(access_token, domain):
    '''
    Visitor statistics by province.

    :param access_token: Baidu Tongji access_token
    :param domain: site domain
    :return: a list of {'name': province, 'value': pv_count}
    '''
    site_info = getSiteList(access_token, domain)
    payload = {
        'access_token': access_token,
        'method': 'overview/getDistrictRpt',
        "metrics": "pv_count",
    }
    payload.update(site_info)
    r = requests.post('https://openapi.baidu.com/rest/2.0/tongji/report/getData', params=payload)
    get_data = r.json()
    # items[0][i][0] is the province name and items[1][i][0] its pv_count
    provinces = []
    for name_row, metric_row in zip(get_data['result']['items'][0], get_data['result']['items'][1]):
        provinces.append({'name': name_row[0], 'value': metric_row[0]})
    return provinces

def get_visitor_counrty(access_token, domain):
    '''
    Visitor statistics by country.

    :param access_token: Baidu Tongji access_token
    :param domain: site domain
    :return: a list of {'name': country, 'value': pv_count}
    '''
    site_info = getSiteList(access_token, domain)
    payload = {
        'access_token': access_token,
        'method': 'visit/world/a',
        "metrics": "pv_count,visitor_count,average_stay_time",
    }
    payload.update(site_info)
    r = requests.post('https://openapi.baidu.com/rest/2.0/tongji/report/getData', params=payload)
    get_data = r.json()
    # items[0][i][0]['name'] is the country name and items[1][i][0] its pv_count
    countries = []
    for name_row, metric_row in zip(get_data['result']['items'][0], get_data['result']['items'][1]):
        countries.append({'name': name_row[0]['name'], 'value': metric_row[0]})
    return countries
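
# get_visitor_province and get_visitor_counrty both pair items[0] (the dimension)
# with items[1] (the metric rows). A minimal sketch (not the author's code) of one
# shared, network-free helper; the name rows_to_name_value is hypothetical. Pass
# name_key='name' for the world report, whose dimension cells are dicts, and leave
# it as None for the district report, whose dimension cells are used as-is.


def rows_to_name_value(items, name_key=None, metric_index=0):
    """Turn a getData `items` pair into [{'name': ..., 'value': ...}, ...]."""
    dimensions, metric_rows = items[0], items[1]
    pairs = []
    for dimension, metrics in zip(dimensions, metric_rows):
        cell = dimension[0]
        name = cell[name_key] if name_key is not None else cell
        pairs.append({'name': name, 'value': metrics[metric_index]})
    return pairs

# Example (network call, not run here):
#     rows_to_name_value(get_data['result']['items'], name_key='name')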

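if __name__ == '__main__':
    # Fill in your own credentials before running
    API_Key = ''
    Secret_Key = ''
    CODE = ''  # one-time code from the authorize URL (valid for 10 minutes)
    refresh_token = ''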
